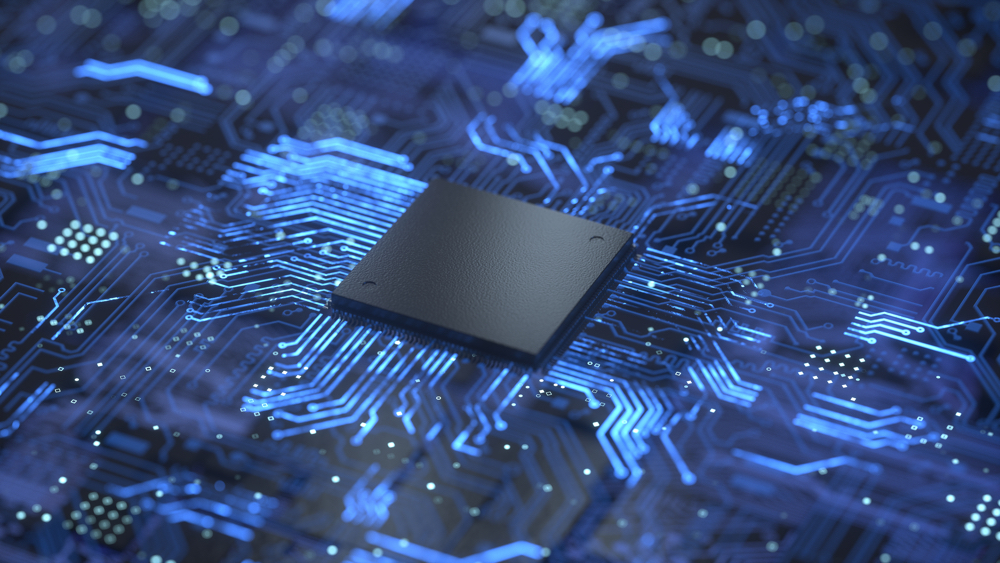

In System-on-Chip (SoC) development, architects and designers juggle many design parameters as they push beyond the performance of the previous generation of devices. At the same time, the growing variety of use cases and software technologies is fundamentally reshaping processing architectures.

They are striving to keep one foot in the present, in terms of usability, manufacturability and testability, bringing both their customers and the wider ecosystem with them. Meanwhile, they are firmly planting their other foot in an era of domain-specific architectures, which encompasses new approaches to processing, memory and I/O.

These domain-specific designs will, in turn, shape later, more standardised architectures.

What has not changed is the demand for ever more performance. Improving overall system performance requires advances in processors, I/O and memory alike.